The journal pre-proof of the article “Machine Learning Based Feedback on Textual Student Answers in Large Courses” by Jan Philip Bernius, Stephan Krusche, and Bernd Brügge is now available as Open Access on ScienceDirect!

- Machine Learning Based Feedback on Textual Student Answers in Large Courses by Jan Philip Bernius, Stephan Krusche, and Bernd Brügge

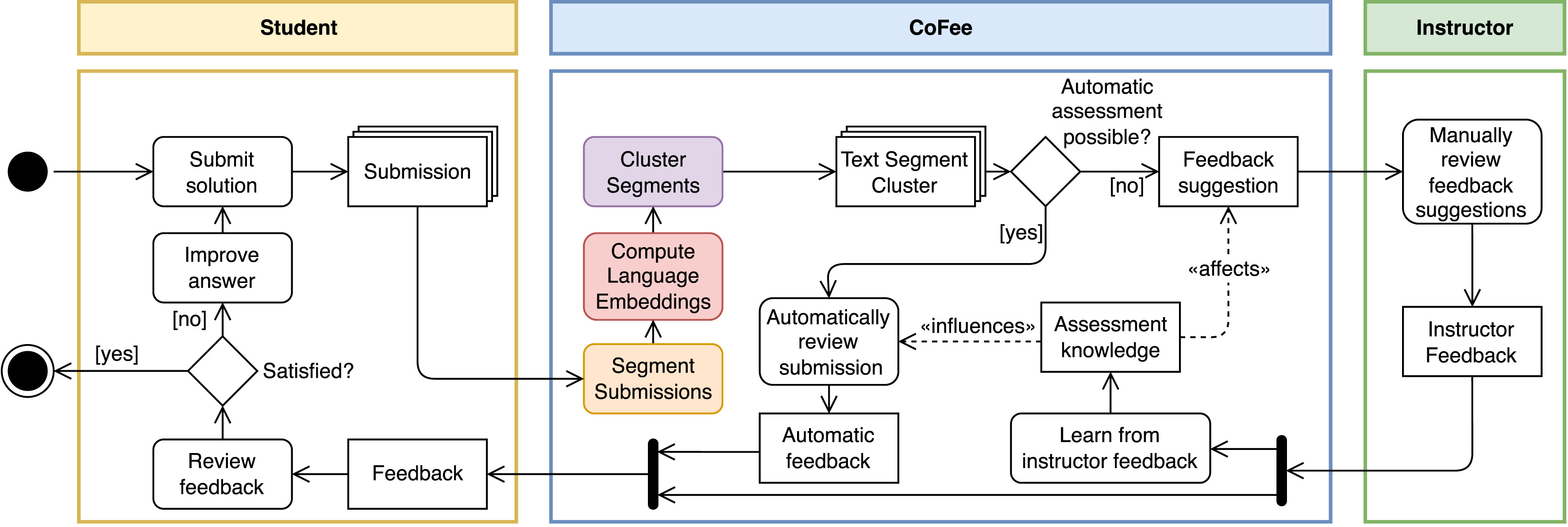

Abstract: Many engineering disciplines require problem-solving skills, which cannot be learned by memorization alone. Open-ended textual exercises allow students to acquire these skills. Students can learn from their mistakes when instructors provide individual feedback. However, grading these exercises is often a manual, repetitive, and time-consuming activity. The number of computer science students graduating per year has steadily increased over the last decade. This rise has led to large courses that cause a heavy workload for instructors, especially if they provide individual feedback to students. This article presents CoFee, a framework to generate and suggest computer-aided feedback for textual exercises based on machine learning. CoFee utilizes a segment-based grading concept, which links feedback to text segments. CoFee automates grading based on topic modeling and an assessment knowledge repository acquired during previous assessments. A language model builds an intermediate representation of the text segments. Hierarchical clustering identifies groups of similar text segments to reduce the grading overhead. We first demonstrated the CoFee framework in a small laboratory experiment in 2019, which showed that the grading overhead could be reduced by 85%. This experiment confirmed the feasibility of automating the grading process for problem-solving exercises. We then evaluated CoFee in a large course at the Technical University of Munich from 2019 to 2021, with up to 2,200 enrolled students per course. We collected data from 34 exercises offered in each of these courses. On average, CoFee suggested feedback for 45% of the submissions. 92% (Positive Predictive Value) of these suggestions were precise and, therefore, accepted by the instructors.

- Workflow of automatic assessment of submissions to textual exercises based on the manual feedback of instructors. CoFee analyzes manual assessments and generates knowledge for the suggestion of computer-aided (automatic) feedback (UML activity diagram).
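The segment-based idea in the abstract can be illustrated with a minimal sketch. This is not CoFee's implementation: the language model is swapped for plain bag-of-words counts, the hierarchical clustering is a simple average-linkage procedure with a cosine-distance cutoff, and every name here (`embed`, `cluster_segments`, `suggest_feedback`, the `threshold` value, the sample segments) is hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def embed(segment):
    """Bag-of-words counts; an assumed stand-in for a language model embedding."""
    return Counter(re.findall(r"[a-z]+", segment.lower()))


def cosine_distance(a, b):
    """1 - cosine similarity of two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - dot / norm if norm else 1.0


def cluster_segments(segments, threshold=0.5):
    """Average-linkage agglomerative clustering; returns lists of segment indices."""
    vectors = [embed(s) for s in segments]
    clusters = [[i] for i in range(len(segments))]

    def linkage(c1, c2):
        total = sum(cosine_distance(vectors[i], vectors[j]) for i in c1 for j in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > 1:
        # Find the closest pair of clusters.
        d, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        if d > threshold:  # assumed cutoff: stop merging dissimilar groups
            break
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


def suggest_feedback(segments, manual_feedback, threshold=0.5):
    """Copy manual feedback to ungraded segments that share a cluster with a graded one."""
    suggestions = {}
    for cluster in cluster_segments(segments, threshold):
        graded = [i for i in cluster if i in manual_feedback]
        if not graded:
            continue  # no knowledge for this cluster yet; an instructor grades it manually
        for i in cluster:
            if i not in manual_feedback:
                suggestions[i] = manual_feedback[graded[0]]
    return suggestions


# Hypothetical sample data: five answer segments, two of them graded by an instructor.
segments = [
    "Inheritance lets a subclass reuse the behavior of its superclass",
    "A subclass can reuse behavior of the superclass through inheritance",
    "Coupling measures the dependencies between two subsystems",
    "Coupling is the number of dependencies between subsystems",
    "I do not know the answer",
]
manual_feedback = {
    0: "Correct definition of inheritance (+1)",
    2: "Correct definition of coupling (+1)",
}

suggestions = suggest_feedback(segments, manual_feedback)
for index, feedback in sorted(suggestions.items()):
    print(index, feedback)
```

In this toy data, segments 1 and 3 receive suggestions because each falls into a cluster with a manually graded segment. Segment 4 stays unassigned and would be left for an instructor. In the workflow described above, an instructor still reviews each suggestion and accepts or rejects it.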

Citation

Machine Learning Based Feedback on Textual Student Answers in Large Courses. Jan Philip Bernius, Stephan Krusche, and Bernd Brügge. In: Computers and Education: Artificial Intelligence, Volume 3. June 2022. doi: 10.1016/j.caeai.2022.100081
